Oil and gas companies have long clung to antiquated systems. The industry needs wholesale evolution, innovation, and diversification, but we must look closely at companies whose workflows remain untouched by digitalization. Whether because of a shortage of tech-savvy workers, an aging workforce, or corporate disdain, many oil and gas companies have been slow to transform into modern enterprises. Over the past two decades, however, the industry did come to understand that data collection was desirable. Yet the disconnect between what is desirable and what is required has produced poor collection frameworks and a decided lack of data hygiene, i.e., the collective processes that keep data clean enough to be used for many different purposes. Artificial Intelligence (AI) could be the avenue by which the resources industry evolves, leveraging historical data, building new forms of value, and attracting a new generation of talent. But to realize the promise of AI, companies must ensure that the data they capture is useful, flexible, and machine-readable.

“Dirty data,” meaning data that is poorly structured, incomplete, rife with duplicates, improperly parsed, or simply wrong, is a considerable challenge for organizations of any size. Mike Roberts, VP of AI/ML at Hypergiant, describes the problem this way: “In our experiences with oil and gas companies, we encounter data that is collected routinely, but rarely accessed and, as a result, may not be subject to the kind of iterative feedback that would ensure its quality. We encounter data collected via traditional means, like paper forms, and stored in a semi-digital state, like scanned images. And we encounter data fragmented across a variety of data storage systems, often lacking keys to tie the records from those various stores together. Digital transformation is more than simply digitizing analog information; it’s creating a complete and coherent data ecosystem that data science can plug into.”
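To make those symptoms concrete, the sketch below profiles a batch of records for three of them: exact duplicates, incomplete records, and values that fail to parse. It is a minimal illustration under assumed conditions, not a description of any Hypergiant tooling: the well-record schema (`well_id`, `spud_date`, `depth_ft`), the helper names, and the parsing rules (ISO 8601 dates, plain numeric depths) are all hypothetical.

```python
from datetime import date

# Hypothetical well-record schema, chosen only for illustration.
REQUIRED_FIELDS = ("well_id", "spud_date", "depth_ft")


def _is_blank(value):
    """Treat None and whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def profile_records(records):
    """Count common 'dirty data' symptoms in a list of dict records."""
    seen = set()
    duplicates = missing = unparseable = 0
    for rec in records:
        # Exact duplicates: same field/value pairs, regardless of key order.
        # Assumes every field value is hashable (strings, numbers, None).
        fingerprint = tuple(sorted(rec.items()))
        if fingerprint in seen:
            duplicates += 1
        seen.add(fingerprint)

        # Incomplete records: a required field is absent or blank.
        if any(_is_blank(rec.get(f)) for f in REQUIRED_FIELDS):
            missing += 1
            continue  # skip parse checks on a record that is already incomplete

        # Improperly parsed values: expects ISO 8601 dates and numeric depths.
        try:
            date.fromisoformat(rec["spud_date"])
            float(rec["depth_ft"])
        except (TypeError, ValueError):
            unparseable += 1

    return {"records": len(records), "duplicates": duplicates,
            "missing_required": missing, "unparseable": unparseable}


sample = [
    {"well_id": "A-1", "spud_date": "2019-03-04", "depth_ft": "10250"},
    {"well_id": "A-1", "spud_date": "2019-03-04", "depth_ft": "10250"},  # duplicate
    {"well_id": "A-2", "spud_date": "", "depth_ft": "9800"},             # missing date
    {"well_id": "A-3", "spud_date": "03/04/2019", "depth_ft": "9800"},   # non-ISO date
]
print(profile_records(sample))
```

The sample contains exactly one of each problem, so each counter reports 1. Counting symptoms before fixing anything is a deliberate choice: it gives a baseline against which later cleaning can be measured.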

One distinction that needs to become more widely understood in the industry is the difference between data and information. Data is raw and unprocessed; it can appear random, truncated, and lacking in organizing principles. Information, by contrast, is data that has been processed, structured, or presented in a way that incorporates context, which is what makes it useful.

Upstream oil and gas companies have been contending with immense data sets and colossal files for years. But one of the industry’s distinctive shortcomings is the ephemeral nature of the data it collects: too often it is discarded, ignored, or analyzed only cursorily. Oil and gas companies do not always view data as a resource with value and can instead be dismissive of its worth, treating it as largely descriptive of physical assets. That is one of the industry’s dirty secrets: most data goes unused, despite prevailing analyst sentiment that “data is the new oil.” Unknown and unused data, known as dark data, makes up more than half of all data collected. Lucidworks estimates that 7.5 septillion gigabytes of data are produced every day and that only 10 percent of it is used in any significant way.1

The resources industry needs to apply Big Data philosophies to analyze and structure the data it collects, rather than falling back on messy and imprecise practices. Roughly two decades ago, industry analysts articulated the “Three Vs” associated with Big Data (volume, variety, and velocity), a framework IBM later helped popularize.2 Since then, others have identified two additional Vs: veracity and value.

Volume is easily understood. The central problem is not a lack of data; by almost any measure, the industry’s data can be considered “big.” Seismic data centers can hold upwards of 20 petabytes of information, roughly 926 times the size of the U.S. Library of Congress. In offshore seismic studies, narrow-azimuth towed-streamer (NATS) surveys gather data to visualize geological characteristics, and wide-azimuth (WAZ) surveys capture an even greater amount of data to produce higher-quality images.3 But this additional data still requires processing and analysis before it becomes useful information. Even setting aside notoriously large data sets like these, oil and gas companies are generating more data than ever before. Globally, some analysts believe that the total amount of digital data doubles every two to three years.4
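A quick back-of-the-envelope check makes these volume figures concrete. Using decimal units (1 PB = 1,000 TB), the 926-times comparison implies a Library of Congress of roughly 21.6 TB. And if the total volume of digital data doubles every $T$ years, it grows by a factor of $G(t) = 2^{t/T}$ after $t$ years, so a doubling time of two to three years works out to roughly 10-fold to 32-fold growth over a decade:

$$
\frac{20\ \text{PB}}{926} = \frac{20{,}000\ \text{TB}}{926} \approx 21.6\ \text{TB}
$$

$$
G(10) = 2^{10/3} \approx 10.1 \quad (T = 3), \qquad G(10) = 2^{10/2} = 32 \quad (T = 2)
$$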

Variety is one area in which evolution is necessary. Too much knowledge about drilling and well maintenance is stored in individual employees’ heads as “tribal knowledge.” Such knowledge is largely contextual and can be accessed only under certain conditions. Advances will come from extracting that knowledge from experts and using it to digitalize paper-based processes.

Velocity refers to the speed at which data is generated and processed. Reducing the time required to turn data into high-quality information has been estimated to save an average of five million man-hours for a mid-sized company in the industry with 6.2 million records.5
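For scale, dividing the cited savings by the record count gives the implied time saved per record, a little under 50 minutes:

$$
\frac{5{,}000{,}000\ \text{man-hours}}{6{,}200{,}000\ \text{records}} \approx 0.81\ \text{hours} \approx 48\ \text{minutes per record}
$$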

Veracity and value go hand in hand. Data has value only if it is accurate and of high quality, and data quality is inseparable from information quality, since low-quality data perpetuates inaccurate information and poor business performance. The imperatives behind accuracy are twofold. First, poorly made decisions carry safety and environmental consequences. Second, most major oil and gas companies are, especially now, focused on optimizing and balancing their business models as cost-effectively as possible, whether by reducing exploration and production expenses, by maximizing production through Wells, Reservoir, and Facilities Management (WRFM) plans, or by extending plant life.6 Gartner has reported that poor data quality may be behind up to 40 percent of failed initiatives across industries and can affect labor productivity by up to 20 percent.7 As industries push toward greater automation, data quality can become a major limiting factor.

Data quality therefore becomes the central challenge, occupying up to 80 percent of a data scientist’s time.8 To take advantage of artificial intelligence, organizations must understand a dual need: the data must be right, and it must be the right data. Most data currently fails the first criterion because of poor measurements, complicated collection and usage processes, or good old-fashioned human error. As for the “right data,” ethical concerns about certain information (e.g., facial recognition data) and underlying bias mean that data that appears “right” on the surface may in fact be compromised. Harvard Business Review (HBR) recommends budgeting four person-months of data cleaning for every person-month spent building a given machine learning model. Data must be assessed for quality, its sources evaluated, and its records de-duplicated and cleaned before use. Eliminating the root causes of errors can minimize the need for ongoing cleaning. HBR further recommends maintaining an audit trail, clearly assigning ownership and governance, and applying rigorous quality assurance so that data completes the transformation into information and becomes viable for leveraging AI.
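The de-duplication and audit-trail steps can be sketched in a few lines. This is a minimal illustration under stated assumptions, not HBR’s prescribed method or any particular product: the `AuditTrail` class, the `clean_records` function, the `well_id` key, and the “first occurrence wins” rule are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditTrail:
    """Append-only log of changes made while cleaning (a hypothetical design)."""
    entries: list = field(default_factory=list)

    def record(self, record_id, action, detail):
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "record_id": record_id,
            "action": action,
            "detail": detail,
        })


def clean_records(records, key_field, audit):
    """Reject keyless records, de-duplicate on a normalized key, trim strings.

    The first occurrence of each key wins; later duplicates are dropped, not
    merged. Every change is logged to `audit`; input dicts are not mutated.
    """
    cleaned, seen = [], set()
    for rec in records:
        raw_key = rec.get(key_field)
        if raw_key is None or not str(raw_key).strip():
            audit.record(None, "reject", f"missing {key_field}")
            continue

        # Normalize the key so that " a-1 " and "A-1" count as the same record.
        key = str(raw_key).strip().upper()
        if key in seen:
            audit.record(key, "drop_duplicate", f"duplicate {key_field}")
            continue
        seen.add(key)

        normalized = {}
        for name, value in rec.items():
            new_value = value.strip() if isinstance(value, str) else value
            if name == key_field:
                new_value = key
            if new_value != value:
                audit.record(key, "normalize", f"{name}: {value!r} -> {new_value!r}")
            normalized[name] = new_value
        cleaned.append(normalized)
    return cleaned


audit = AuditTrail()
rows = [
    {"well_id": "a-1 ", "operator": " Acme "},
    {"well_id": "A-1", "operator": "Acme"},
    {"well_id": "", "operator": "Acme"},
]
result = clean_records(rows, "well_id", audit)
print(result)
for entry in audit.entries:
    print(entry["action"], entry["record_id"], entry["detail"])
```

Logging every change, rather than silently overwriting values, is what turns a one-off cleanup into something reviewable: an owner can later trace why a record was dropped or altered and fix the root cause upstream.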

In conclusion, oil and gas organizations face many of the same challenges as other industries when it comes to using data for artificial intelligence. But past decisions, internal risk aversion, and anachronistic workflows make data cleanliness even harder to achieve. Carving out a small part of the business as an artificial intelligence proof point may be necessary to demonstrate value in a clear, tangible way. Only when results are accompanied by appropriate value calculations will data hygiene be viewed as a necessary component of the business. And oil and gas companies can be sure that some competitors have already convinced their leadership: each day that passes is another day spent ill-prepared for the future.

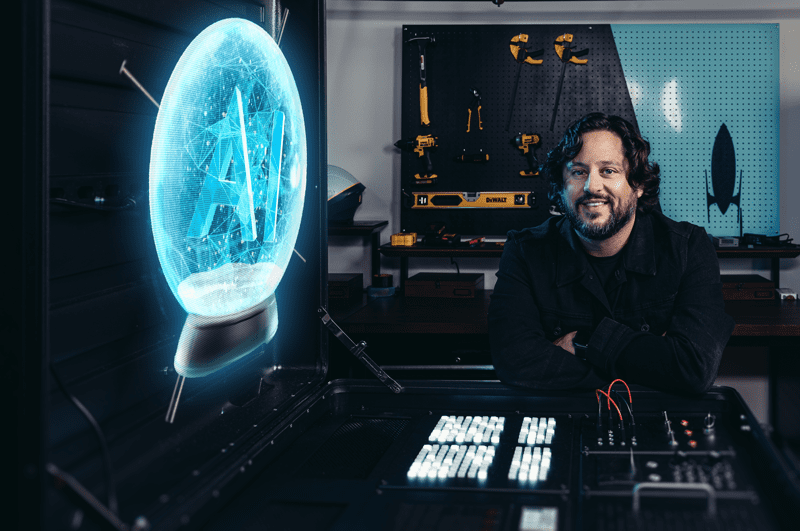

Headline photo: Ben Lamm, co-founder, executive chairman, and CEO of Hypergiant

1 – “Dark Data and Why You Should Worry About It,” by Ian Barker, Betanews.com, 2016.

2 – “The NoSQL conundrum: Lagged veracity and the double-edged promise of eventual consistency” by James Kobielus, IBM Big Data and Analytics Hub, December 5, 2013.

3 – “Big Data analytics in oil and gas industry: An emerging trend,” by Mehdi Mohammadpoor and Farshid Torabi, Petroleum, December 1, 2018.

4 – “Big Data in the Oil and Gas Industry: A Promising Courtship,” by Michelle R. Tankimovich, University of Texas, thesis, May 4, 2018.

5 – “The Big Data Imperative: Why Information Governance Must Be Addressed Now” by Aberdeen Group, December 2012.

6 – “Data Quality in the Oil & Gas Industry,” by Emmanuel Udeh, ETL.com.

7 – “Measuring the Business Value of Data Quality,” by Ted Friedman and Michael Smith, Gartner Research, October 10, 2011.

8 – “If Your Data is Bad, Your Machine Learning Tools Are Useless,” by Thomas C. Redman, Harvard Business Review, April 2, 2018.

Ben Lamm is a serial technology entrepreneur dedicated to making the impossible possible. He builds intelligent, disruptive software companies that help the Fortune 500 innovate with breakthrough technologies. Ben is the co-founder, Executive Chairman, and CEO of Hypergiant Industries, the office of machine intelligence. Previously, he was the founder and CEO of Conversable, the leading conversational intelligence platform acquired by LivePerson; the founder and CEO of Chaotic Moon Studios, the global creative technology powerhouse acquired by Accenture; and the co-founder of Team Chaos, acquired by Zynga.