An effective AI platform needs a reliable master data strategy and cloud computing capabilities to build upon, as well as sound data integration and cleansing, for optimal performance and outcomes.

Over the last few years, adoption of artificial intelligence (AI) by companies has surged 270 percent. Companies are pushing to invest more in AI technology, and the trend shows no sign of slowing. Despite the eagerness, however, AI applications are not simply plug and play. As in construction, a solid foundation must be laid before anything can be built on it. A sound master data strategy (MDS), cloud computing capabilities, and data integration and cleansing are all part of the foundation AI must be built upon.

How Does My Master Data Strategy Connect With AI?

Before discussing the connection between an MDS and an AI platform, let’s first define an MDS and explain why it is key to AI implementation. An MDS is how a company organizes its data, and it gives meaning and context to transactions and analytics. Master data can be internally or externally defined. It provides the who, what, when, where and why relating to business processes.

An effective MDS is crucial to implementing an AI platform, as data is the fuel that powers AI analytics. As major oil and gas companies formed different segments of their enterprise based on geographic locations, operational lines, or even specific refineries, they inadvertently created data silos. These data silos can trap data and may classify the same object with different names.

An MDS should incorporate data from these silos, determine universal naming conventions, and remove duplicate and erroneous records. This drastically reduces headaches and delays during AI implementation, because it avoids the daunting task of digging through data to determine where and why the AI application failed.
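As a rough illustration of what applying universal naming conventions and removing duplicates can look like, here is a minimal Python sketch. The alias table, the asset names and the record fields (`asset`, `date`, `barrels`) are all hypothetical and chosen only for this example; a real MDS would define its own.

```python
# Hypothetical alias table: each silo-specific label maps to one canonical name.
CANONICAL_NAMES = {
    "well-07": "WELL_007",
    "w7": "WELL_007",
    "well 7": "WELL_007",
    "refinery a": "REFINERY_A",
    "ref-a": "REFINERY_A",
}


def to_canonical(raw_name):
    """Return the canonical name for a silo-specific label, or None if unknown."""
    return CANONICAL_NAMES.get(raw_name.strip().lower())


def consolidate(records):
    """Apply canonical names, drop exact duplicates, and set aside unknown names."""
    clean, rejected, seen = [], [], set()
    for record in records:
        name = to_canonical(record["asset"])
        if name is None:
            rejected.append(record)  # unknown label: needs a human decision
            continue
        key = (name, record["date"], record["barrels"])
        if key in seen:
            continue  # the same fact was already reported by another silo
        seen.add(key)
        clean.append({"asset": name, "date": record["date"], "barrels": record["barrels"]})
    return clean, rejected


# Hypothetical records from different silos; the first two describe the same well.
silo_records = [
    {"asset": "Well-07", "date": "2020-01-01", "barrels": 120},
    {"asset": "W7", "date": "2020-01-01", "barrels": 120},
    {"asset": "Ref-A", "date": "2020-01-01", "barrels": 900},
    {"asset": "Pad 12", "date": "2020-01-01", "barrels": 75},
]

clean, rejected = consolidate(silo_records)
print(clean)
print(rejected)
```

Records with unknown labels are set aside rather than guessed at, so that someone who owns the master data can decide how to name them.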

Why Are Cloud Computing and Storage Beneficial to AI?

The emergence of Amazon Web Services (AWS), Google Cloud, and Microsoft Azure has led to an explosion of different use cases and capabilities. Cloud computing and storage are incredibly beneficial to an AI platform because of their real-time data collection, elasticity and global availability. The scalability of the cloud allows companies to increase data storage and computing power as their needs expand over time, which in turn enables them to grow on a global scale.

The cloud allows IoT devices at remote oil wells to upload data constantly, so that it can be compiled and analyzed by AI faster than ever before. Data scientists currently spend about 80 percent of their time finding, retrieving, consolidating, cleaning, and preparing data for analysis and algorithm training. By leveraging the infrastructure provided by a cloud platform, the time spent on all of these steps can be drastically reduced. Data scientists can then focus on what’s truly important: building and training AI analytical tools to make them perform better, faster and more efficiently.

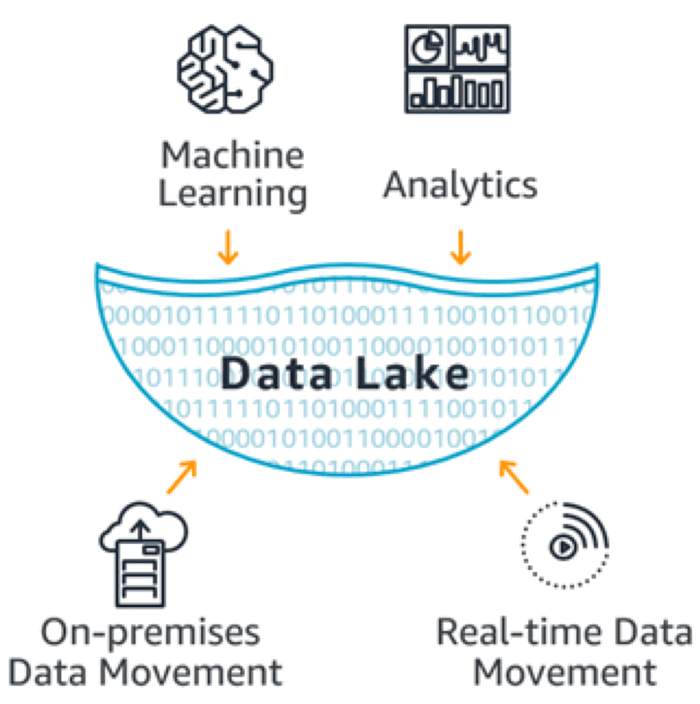

A new breakthrough in cloud computing and storage is the emergence of data lakes, which can store structured and unstructured data, as well as relational and non-relational data. This allows raw data to be collected from a variety of sources, such as IoT devices, CRM platforms, and other existing databases. Giving an AI platform access to all this data enables it to run faster, more complete analytics without moving the data to a separate system beforehand. However, as with planning an MDS, data lakes should be carefully designed so that their architecture supports defining, cataloging and securing incoming data. For example, the data lake platform Snowflake integrates with a company’s current cloud storage and transforms it into an effective, AI-ready data lake.

How Do Data Integration and Cleansing Connect to AI?

Access to clean data is the lifeblood of any AI application. Data integration is the ability to view data from different sources together in one location, and this is needed in an AI project to connect all the necessary inputs to the application. However, in each place the data resides, it may be represented in a different format or coding language, and it may even be separated into silos or in the hands of different business groups with different priorities. To consolidate all of this data into one AI application, a process or computer program must be developed to extract the necessary data, convert it for AI use, and finally integrate it into the AI program.
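The extract, convert and integrate steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two exports, their field names (`well`, `pressure_psi`, `wellId`, `pressureKpa`) and the target schema are hypothetical, and a real pipeline would read from actual source systems rather than inline strings.

```python
import csv
import io
import json

# Hypothetical exports from two silos that describe the same kind of reading
# in different formats and different units.
CSV_EXPORT = "well,pressure_psi\nWELL_007,1450\nWELL_012,1380\n"
JSON_EXPORT = '[{"wellId": "WELL_019", "pressureKpa": 9652.66}]'

KPA_PER_PSI = 6.894757  # standard conversion factor


def extract_csv(text):
    """Extract rows from a CSV export as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json(text):
    """Extract rows from a JSON export as dictionaries."""
    return json.loads(text)


def convert_csv_row(row):
    """Convert a CSV row to the common schema (pressure in psi, as a float)."""
    return {"well": row["well"], "pressure_psi": float(row["pressure_psi"])}


def convert_json_row(row):
    """Convert a JSON row to the common schema, changing kPa to psi."""
    psi = round(row["pressureKpa"] / KPA_PER_PSI, 1)
    return {"well": row["wellId"], "pressure_psi": psi}


def integrate():
    """Combine both sources into one list that an AI application could consume."""
    rows = [convert_csv_row(r) for r in extract_csv(CSV_EXPORT)]
    rows += [convert_json_row(r) for r in extract_json(JSON_EXPORT)]
    return sorted(rows, key=lambda r: r["well"])


dataset = integrate()
for row in dataset:
    print(row)
```

Keeping one small converter per source makes it easier to add a new silo later without touching the code for the existing ones.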

Data cleansing is the process of sifting through data to ensure that it is:

- Accurate

- Complete

- Consistent across datasets

- Using uniform metrics

- Conforming to defined business rules

In the end, cleansing ensures that your data is of the right quality to support the applications that use it, which is particularly important to AI applications because of the massive quantities of data they consume.
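The cleansing criteria listed above can be expressed as simple automated checks. The following Python sketch is one possible shape for such checks; the required fields, the master list of wells, the expected unit and the daily volume limit are all hypothetical values invented for illustration.

```python
REQUIRED_FIELDS = ("well", "date", "volume", "unit")
KNOWN_WELLS = {"WELL_007", "WELL_012"}  # hypothetical master list from the MDS
EXPECTED_UNIT = "bbl"                   # hypothetical uniform metric (barrels)
MAX_DAILY_VOLUME = 10_000               # hypothetical business rule


def check_record(record):
    """Return a list of data quality problems found in one record."""
    problems = []

    # Complete: every required field is present and non-empty.
    missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if missing:
        problems.append("incomplete: missing " + ", ".join(missing))
        return problems  # the remaining checks need all fields

    # Accurate: volume must be a non-negative number.
    volume = record["volume"]
    if not isinstance(volume, (int, float)) or volume < 0:
        problems.append("inaccurate: volume must be a non-negative number")
    # Conforming to business rules: volume must not exceed the daily maximum.
    elif volume > MAX_DAILY_VOLUME:
        problems.append("business rule: volume exceeds daily maximum")

    # Consistent across datasets: the well must exist in the master list.
    if record["well"] not in KNOWN_WELLS:
        problems.append("inconsistent: well not in master list")

    # Uniform metrics: every record must use the same unit.
    if record["unit"] != EXPECTED_UNIT:
        problems.append("non-uniform metric: expected " + EXPECTED_UNIT)

    return problems


good = {"well": "WELL_007", "date": "2020-01-01", "volume": 120, "unit": "bbl"}
bad = {"well": "WELL_099", "date": "2020-01-01", "volume": 120, "unit": "m3"}

print(check_record(good))
print(check_record(bad))
```

A record that returns an empty list passes every check; anything else can be routed to a review queue instead of being fed to the AI application.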

How to Get Started with Data Integration and Cleansing

Data engineers play a critical role in data integration and cleansing, with the goal of integrating data into systems across the company, including AI applications. They typically have experience with SQL and NoSQL databases, as well as programming languages that allow them to extract and transform data. Additionally, if data is in the hands of different business units, a cross-business team should be formed to lead integration efforts. This ensures that as data is moved between business units, each unit gets the data it needs to do its job.

Data must be cleansed on a regular basis, referencing the standards and naming conventions defined by the MDS. This will keep data uniform across the organization and maintain its integrity over time.

Takeaway

AI needs the proper support in order to make the magic happen. The technological foundation provided here will ensure that AI can add value to the business.

The next installment of this mini-series will discuss where and how to start an AI implementation project to achieve a sustainable and effective AI infrastructure.

This is the second installment of a three-part series discussing AI’s potential and critical role in oil and gas, how a company can prepare for an AI platform, and important steps to executing a sound AI implementation.

Headline image courtesy of MIBAR.net

Patrick O’Brien is an intern with Opportune LLP’s Process & Technology group. He is concurrently pursuing an undergraduate degree in chemical engineering and an MS in finance at Texas A&M University.

Ian Campbell is an intern with Opportune LLP’s Process & Technology group. He is pursuing an undergraduate degree in management information systems with a certification in business analytics at Baylor University.