Perhaps the worst kept secret in oil and gas is that the “calendar-based maintenance” operating philosophy we inherited from our predecessors many decades ago is due for an overhaul. The fear of unexpected downtime – when parts fail before their mean time to failure – has led most organizations to “over-maintain” and often rip and replace assets that still have meaningful useful life left, which also bloats spares inventory.

Another poorly kept industry secret is what I like to call “crying-wolf syndrome” – plants tripping when they really shouldn’t be. Midstream oil and gas processes are subject to the same “fail-safe” operating philosophies of our parents’ generation, despite the quantum leaps in our abilities to identify and quantify risks. The way this plays out in practice looks something like this: a sensor value is suddenly outside an operating range or not registering, and this causes a train to trip. In reality, it’s the sensor itself that is faulty, and the wealth of other data points we have available makes that clear to any seasoned operator. Nevertheless, the train trips. One LNG executive told me that 80% of their trips in 2019 were avoidable and due to similar faulty sensor readings. Worse still, the dollar cost of these avoidable trips was over nine figures. The seasoned operator “just knows” that the plant shouldn’t be tripping when looking back at the data, but missed it in real time because of the hundreds of alarms he was addressing at the same time. Perfect application of AI, right? Let an AI-powered application identify “cried-wolf” scenarios and alert operators to them.
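To make the “cried-wolf” idea concrete, here is a minimal sketch of the kind of cross-check a seasoned operator does in their head. It assumes redundant sensors measuring the same quantity; the tag names, readings, tolerance, and the `suspect_sensors` function are all hypothetical, and a simple median comparison stands in for whatever a real AI application would use.

```python
from statistics import median

def suspect_sensors(readings, tolerance):
    """Return names of sensors that disagree with the median of their peers.

    readings: dict mapping sensor name -> latest value, or None if not registering.
    tolerance: largest deviation from the peer median still treated as agreement.
    """
    suspects = []
    for name, value in readings.items():
        peers = [v for n, v in readings.items() if n != name and v is not None]
        if len(peers) < 2:
            continue  # not enough corroborating data to judge this sensor
        peer_median = median(peers)
        peers_agree = all(abs(v - peer_median) <= tolerance for v in peers)
        # Flag a dead sensor, or one that stands alone against agreeing peers.
        if value is None or (peers_agree and abs(value - peer_median) > tolerance):
            suspects.append(name)
    return suspects

# Hypothetical discharge-pressure readings (bar) from three redundant transmitters.
readings = {"PT-101A": 42.1, "PT-101B": 41.8, "PT-101C": 0.0}
flagged = suspect_sensors(readings, tolerance=1.5)
print(flagged)  # ['PT-101C']
```

The point of the sketch is the decision rule, not the math: when the other data points agree with each other and only one sensor disagrees, the sensor is the more likely culprit than the process.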

Another terribly kept secret in the oil and gas world, but possibly the best kept secret in Silicon Valley, is that AI has promised to relieve the industry of these and other midstream challenges, and has failed miserably. This failure has very little to do with the size and slow pace of the oil behemoths. On the contrary, when the oil and gas top brass of the world sought out Digital Transformation 2.0, they bought into the vision of AI for industry, and they truly wanted it to work. The technology failed them – the PowerPoint claims stitched together by the best and the brightest painted a much rosier picture than the disappointing reality “post implementation.” Let’s find out why.

So, you’re an oil executive, possibly head of innovation, and you want to implement an AI strategy to solve problems that to date have had no elegant solution (think predictive maintenance, sensor health). What do you do? You have two broad options: build it all in-house or pick an “AI company.” Let’s unpack both options.

A “Build it Yourself” AI Strategy

This is a great approach. Leveraging your existing data scientists, if you have them, or building that capability in-house is probably the best move. Data scientists within the organization were often operators in their previous roles and, by osmosis, have a deeper knowledge of the underlying asset. This enables them to build a better model that tackles the right problems. Moreover, since they are working in-house, they will often have access to a large data lake that they can use to experiment with models. Where this approach fails is in deploying their models. Deployment often never happens, and the team becomes a backroom function cooking up theoretical solutions. Why can’t they operationalize their models? For the same reason many AI companies fail. It’s a structural problem with ML and applications that we’ll also discuss below.

An Outsourced AI Strategy – What Could Go Wrong?

Hiring an “AI company” too often ends with disappointed oil execs, jaded in-house data scientists, and initially skeptical (and territorial) operators saying, “I told you so.” Why? Let’s examine the typical approach of an “AI company.” They usually provide an AI platform for the client’s use to solve its most difficult challenges. That sounds fair enough, so then what happens? Well, you’d hope for a specific model to be built using the platform, and then voilà, implement the model, get your result – simple enough. How could it go wrong?

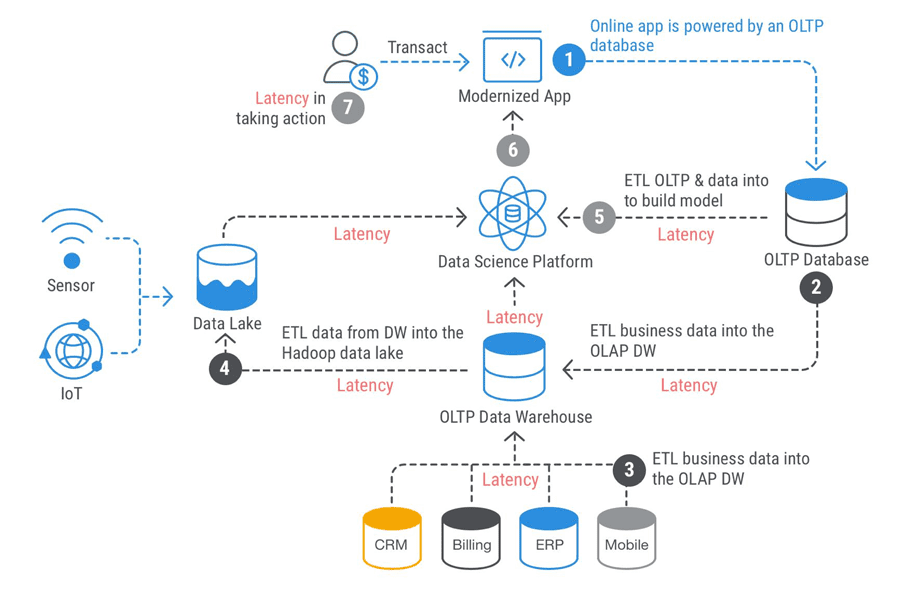

Latency – The Enemy of Successful AI Implementation

Without getting too technical, different types of data live in different databases and in order to do any meaningful analysis, you have to physically bring the data together, often through a laborious ETL process. You likely have purpose-built legacy applications, powered by an operational relational database. The data from that relational database is first moved or ETL’ed into a data warehouse – your first source of latency. Next, you’re probably ETL’ing data from your enterprise applications (think CRM, ERP) into the same data warehouse to consolidate and utilize for meaningful management reporting. That adds further data movement and latency into the process. You may also have a data lake for unstructured and sensor data – a similar ETL process happens with this, too. In order to now utilize a data-science platform to build and train AI models, all that data from your operational database, your data warehouse, and your data lake needs to be brought to that data science platform. The manifestation of all this latency is that our data scientists fail to get the most recent data to build their models, which affects how quickly a model can be deployed into production. While transactions in the real world are taking place, the model you built to predict these events and take appropriate action fails to do so.
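A quick back-of-the-envelope sketch shows how these hops add up. The hop names follow the paragraph above, but the delay figures are purely hypothetical placeholders; real refresh intervals vary widely from site to site.

```python
from datetime import timedelta

# Hypothetical refresh delays for sequential data movements; not measured values.
hops = [
    ("operational DB -> data warehouse", timedelta(hours=24)),
    ("data warehouse -> data science platform", timedelta(hours=12)),
    ("model output -> operations dashboard", timedelta(hours=1)),
]

def worst_case_staleness(hops):
    """Sum the per-hop delays for a chain of sequential data movements."""
    return sum((delay for _, delay in hops), timedelta())

total = worst_case_staleness(hops)
print(f"Worst-case data age: {total.total_seconds() / 3600:.0f} hours")
# Worst-case data age: 37 hours
```

Under these assumed numbers, a prediction that reaches the operator could be based on data more than a day and a half old, which is the gap the rest of this article is concerned with.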

What the AI companies will claim is that they can work on top of any solution stack, and this may, in fact, be true. But how are they operationalizing their outputs? The diagram above shows a typical lengthy process that’s often hidden under glossy marketing materials. Some “AI companies” took it a step further, wrapped a nice graphical user interface around the platform, and tried to win over the non-technical executives. What the data scientists found in these cases: drag-and-drop platforms, no notebooks or access to code, and guided “AutoML” black-box solutions (throw in your datasets and we give you an answer). Both operators and data scientists would agree on this one – completely impractical. Aside from the fact that no data scientist would feel comfortable using this, it also introduces a compliance and governance nightmare. With no auditability or flexibility, regulators and insurance companies would object strongly to this approach. And yet, the overarching issue with all these examples is latency due to the architecture. Data has to be moved from one place to another, and possibly a third location, before any meaningful analysis can be conducted. Once conducted, the analysis is often significantly less accurate than everyone had hoped or promised. Hence, the relegation of AI in midstream to a pipe dream.

The structural issues I just outlined have become known as the “last-mile” problem for AI – how to operationalize models effectively. So, what’s the solution? Are we stuck with the statistical software packages of the last generation? Have we reached peak innovation in the oil and gas industry? No. There’s a better way. Why aren’t AI and other analytics being run directly where the data sits? So far, it’s because of data silos and the limitations of the current database offerings.

So, What’s the Solution?

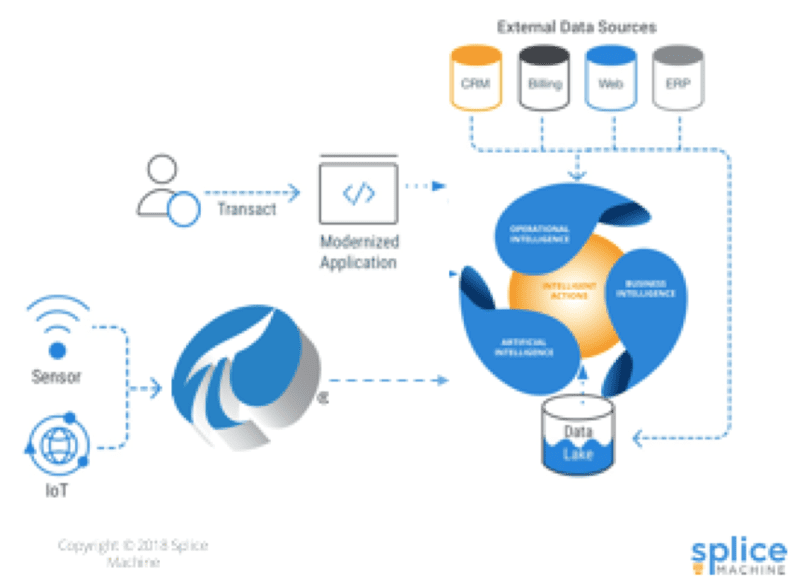

The answer lies in a converged architecture that can natively perform machine learning in the database, alongside the operational and analytical workloads currently powered by legacy databases and data warehouses. This all-in-one database concept avoids the data movement and ETL that creates latency, and also makes it easier for data scientists to work on real-time data.

What if there were a converged architecture that offered TSDB, OLTP, OLAP, and ML all in one database built from the ground up? That would certainly fix the latency problem faced by everyone today and address the issues the industry has faced with operationalizing AI. On top of all that, if such a solution existed, could it run on premises or in the hybrid environment that most process-industry clients require?

The proposed architecture combines three kinds of computation (operational, analytical, and artificial intelligence) into one platform. It scales out to increase application throughput, stores all information in a fast SQL database, and uses an integrated ML platform to streamline and accelerate the design and deployment of intelligent applications. Data science teams are brought to the forefront, and operational teams can trust the models because they are fully transparent, time-stamped, and auditable. Moreover, you can improve your models as operating conditions change. In-database AI can offer a real solution to the latency problem and the ability to operationalize the data science team’s work.
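As a toy illustration of scoring “where the data sits,” the sketch below registers a model as a SQL function so that predictions are computed inside the query itself. It uses Python’s built-in SQLite purely as a stand-in; it is not the converged platform described here. The table, column names, rows, and the `trip_risk` formula are all hypothetical.

```python
import sqlite3

def trip_risk(pressure_bar, temperature_c):
    """Toy stand-in for a trained model: returns a risk score between 0 and 1."""
    score = 0.02 * (pressure_bar - 40.0) + 0.01 * (temperature_c - 80.0)
    return max(0.0, min(1.0, score))

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sensor_readings "
    "(ts TEXT, train TEXT, pressure_bar REAL, temperature_c REAL)"
)
conn.executemany(
    "INSERT INTO sensor_readings VALUES (?, ?, ?, ?)",
    [
        ("2020-01-01T00:00:00", "train-1", 41.0, 82.0),  # hypothetical rows
        ("2020-01-01T00:00:00", "train-2", 65.0, 120.0),
    ],
)

# Register the model as a SQL function so scoring runs inside the query,
# against the operational table, with no export to a separate platform.
conn.create_function("trip_risk", 2, trip_risk)

rows = conn.execute(
    "SELECT train, trip_risk(pressure_bar, temperature_c) AS risk "
    "FROM sensor_readings ORDER BY risk DESC"
).fetchall()
for train, risk in rows:
    print(train, round(risk, 2))
```

Because the score is computed in the same place the readings are written, there is no ETL hop between a new sensor value and the prediction that depends on it.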

Conclusion

With the architecture outlined above, we can safely push the envelope on outdated parameters. We can increase the accuracy of our maintenance predictions and avoid costly trips and outages. The energy landscape has experienced a tectonic shift: companies are being forced to operate on razor-thin margins, yet we are all overspending on maintenance and avoidable shutdowns. With this architecture, we can finally make good on the promise of AI for oil and gas.

1 Extract, Transform, and Load (ETL) scripts are complex computations that take raw data and make it usable for analysis and data science.

2 TSDB – Time Series Database; OLTP – Online Transaction Processing; OLAP – Online Analytical Processing; ML – Machine Learning.

Monte Zweben is the CEO and co-founder of Splice Machine. A technology industry veteran, Monte’s early career was spent with the NASA Ames Research Center as the deputy chief of the artificial intelligence branch, where he won the prestigious Space Act Award for his work on the Space Shuttle program. Monte then founded and was the chairman and CEO of Red Pepper Software, a leading supply chain optimization company, which later merged with PeopleSoft, where he was VP and general manager, Manufacturing Business Unit. Then, Monte was the founder and CEO of Blue Martini Software – the leader in e-commerce and omni-channel marketing. Monte is also the co-author of Intelligent Scheduling, and has published articles in the Harvard Business Review and various computer science journals and conference proceedings. He was Chairman of Rocket Fuel Inc. and serves on the Dean’s Advisory Board for Carnegie Mellon University’s School of Computer Science.